Part 1 – The False Sense of Physical Security

As is often the case when new paradigms are advanced, cloud computing as a viable method for sourcing information technology resources has met with many criticisms, ranging from doubts about its basic suitability for enterprise applications to warnings that “pay-as-you-go” models harbor unacceptable hidden costs when used at scale. But perhaps the most widespread criticism, and the most difficult to refute, is the notion that the cloud model is inherently less secure than traditional data center models.

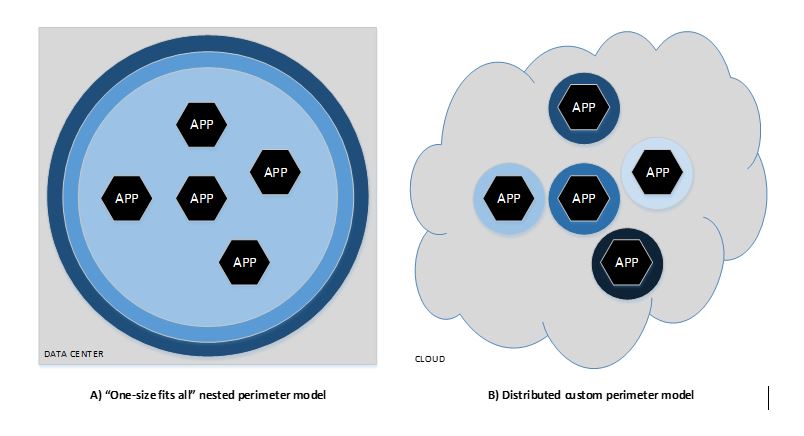

It is easy to understand why some take the position that cloud is unsuitable, or at the least very difficult to harness, for conducting secure business operations. Traditional security depends heavily on the fortress concept, one that is ingrained in us as a species: we have a long history of securing physical spaces with brick walls, barbed-wire fences, moats, and castles. Security practice has long advocated placing IT resources inside highly controlled spaces, with perimeter defenses as the first and sometimes only obstacle to would-be attackers. Best practice teaches the “onion model,” a direct application of the defense-in-depth concept, where there are castles within brick walls within barbed-wire fences, creating multiple layers of protection for the crown jewels at the center, as shown in Figure A. This model is appealing because it is natural to assume that if we place our servers and disk drives inside a fenced-in facility on gated property with access-controlled doors and locked equipment racks (i.e., a modern data center), then they are as secure as they can be. Physical control over the infrastructure translates automatically into a sense that the applications and data it contains are protected. Conversely, when those same applications and data are placed on infrastructure we can’t see, touch, or control at the lowest level, we question just how secure they really can be. Trusting them to a cloud service provider requires more faith than most are able to muster.

But the advent of the commercial Internet resulted in exponential growth in the adoption of networking services, and today Internet connectivity is an absolute “must have” for nearly all computing applications, not the novelty it was 20 years ago. The degree of required connectivity is such that most organizations can no longer keep up with requested changes to firewalls and access policies. The result is a less agile, less competitive business encumbered by unwieldy nested perimeter-based security systems, as shown in Figure A above. Even when implemented correctly, those traditional security measures often fail because the perimeter defenses cannot possibly anticipate all the ways that applications on either side of the Internet demarcation may interact.

The implication is that applications must now be written without assumptions about, or dependencies upon, the security profile of the broader execution environment. One can’t simply assume the hosting environment is secure. A secure hosting environment is still quite important, but for different reasons. More on this line of thinking, and the explanation of Figure B and why it is more desirable in the cloud era, in my next post.