Part 2 – The Compositional Frontier

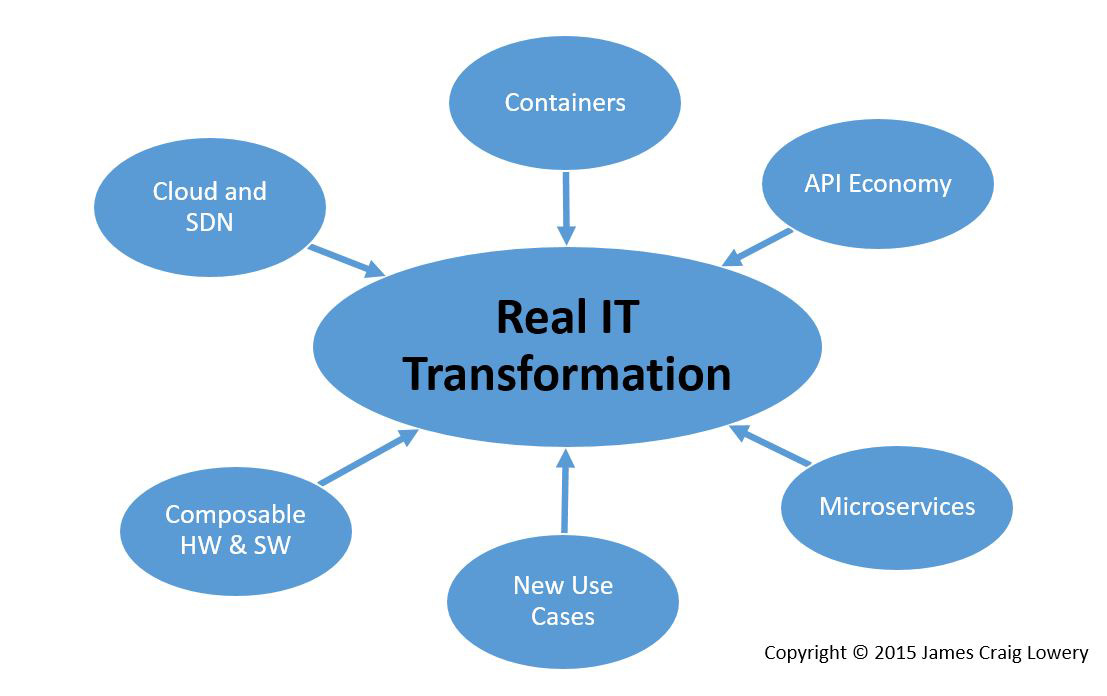

In my previous installment, I introduced the idea that the Information Technology industry is approaching, and perhaps is already beginning to cross, a major point of inflection. I likened it to a barrier synchronization, in that six threads (shown in the graphic below) of independently evolving activity are converging at this point to create a radically new model for how business derives value from IT. In the next six parts, I’ll tackle each of the threads and explain where it came from, how it evolved, and why its role is critical in the ensuing transformation. We’ll start in the lower left corner with “Composable Hardware and Software.”

The Hardware Angle

Although hardware and software are usually treated quite differently, the past 15 years have taught us that software can replace hardware at nearly every level of abstraction above the most primitive backing resources. The success of x86 virtualization is a primary example, but it goes far beyond simply replacing or further abstracting physical resources into virtual ones. Server consolidation was the initial use case for this technology, allowing us to subdivide and dynamically redistribute physical resources across competing workloads. A long list of further advantages of abstracting physical resources in software soon followed: live migration, appliance packaging, snapshotting and rollback, and replication and disaster recovery all demonstrated that “software defined” means “dynamic and flexible,” a characteristic the business values highly because it enables agility and velocity.

Clearly, the ability to manage large pools of physical resources (e.g., an entire datacenter rack or even an aisle) and compose “right-sized” virtual servers from them is highly valued. Although hypervisor technology made this a reality, it did so at the cost of a performance penalty and an extra layer of complexity. This has incentivized hardware manufacturers to begin building composable capabilities directly into the hardware/firmware combinations that they design.
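To make the idea of composing “right-sized” servers from a shared pool concrete, here is a minimal sketch in Python. It is purely illustrative: `ResourcePool`, `ServerSpec`, and the capacity numbers are hypothetical names and values of my own, not any vendor's composable-hardware API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ServerSpec:
    """Hypothetical description of a right-sized server."""
    cpus: int
    memory_gb: int


@dataclass
class ResourcePool:
    """Hypothetical rack-level pool of disaggregated CPU and memory."""
    free_cpus: int
    free_memory_gb: int
    servers: dict = field(default_factory=dict)

    def compose(self, name: str, spec: ServerSpec) -> ServerSpec:
        # Carve a server out of the pool, or refuse if the pool is too small.
        if name in self.servers:
            raise ValueError(f"{name} already exists")
        if spec.cpus > self.free_cpus or spec.memory_gb > self.free_memory_gb:
            raise RuntimeError("not enough free resources in the pool")
        self.free_cpus -= spec.cpus
        self.free_memory_gb -= spec.memory_gb
        self.servers[name] = spec
        return spec

    def release(self, name: str) -> None:
        # Return a server's resources to the pool for the next workload.
        spec = self.servers.pop(name)
        self.free_cpus += spec.cpus
        self.free_memory_gb += spec.memory_gb


rack = ResourcePool(free_cpus=64, free_memory_gb=512)
rack.compose("web", ServerSpec(cpus=4, memory_gb=16))
rack.compose("db", ServerSpec(cpus=16, memory_gb=128))
rack.release("web")  # the web server's share goes back into the pool
```

The point of the sketch is the bookkeeping: servers are defined by what the workload needs, and whatever they give back is immediately available to the next one.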

Although one could argue that this obviates hypervisor virtualization, composable hardware does not provide all the functionality that hypervisors and their attendant systems management ecosystems do. However, the combination of composable hardware and container technology does begin to erode the value of “heavy” server virtualization. The opportunity for hypervisor vendors is to anticipate this coming change, extend their technologies and ecosystems to embrace these value shifts, and ease their users’ transition within a familiar systems management environment. (VMware’s recent Photon effort follows this strategy.)

The Software Angle

Modular software is not new. The landscape is littered with many attempts, some successful, at viewing applications as constructs of modular components that can be composed in much the same way as one would build a Lego or Tinker Toy project. Service Oriented Architecture is the aegis under which most of these efforts fall, in which the components are seen as service providers, and a framework enables the discovery and binding of them into something useful. The figure below shows how two different applications (blue and green) each consist of their own master logic (a “main” routine) plus callouts to third-party applications, one of which they have in common. Such deployments are quickly becoming commonplace and will eventually be the norm.

The success of such a model depends on two things: 1) accessible, useful, ready-to-use content; and 2) a viable, well-defined, ubiquitous framework. In other words, you need to be able to source the bricks quickly, and they should fit together pretty much on their own, without a lot of work on your part.
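The discover-and-bind idea, with two applications sharing one third-party service, can be sketched in a few lines of Python. This is a toy under stated assumptions: `ServiceRegistry`, the service names, and the lambda “providers” are all hypothetical stand-ins for real content and a real framework.

```python
from typing import Callable, Dict


class ServiceRegistry:
    """Hypothetical framework: providers register, applications discover."""

    def __init__(self) -> None:
        self._services: Dict[str, Callable[..., object]] = {}

    def register(self, name: str, provider: Callable[..., object]) -> None:
        self._services[name] = provider

    def discover(self, name: str) -> Callable[..., object]:
        try:
            return self._services[name]
        except KeyError:
            raise LookupError(f"no provider registered for {name!r}") from None


# The "bricks": stand-ins for third-party services (assumed, not real APIs).
registry = ServiceRegistry()
registry.register("payments", lambda amount: f"charged ${amount:.2f}")
registry.register("geocode", lambda city: {"Austin": (30.27, -97.74)}.get(city))
registry.register("thumbnail", lambda filename: f"thumb_{filename}")


def blue_main():
    # Blue's own master logic, bound to payments and geocode.
    pay = registry.discover("payments")
    geocode = registry.discover("geocode")
    return pay(19.99), geocode("Austin")


def green_main():
    # Green's own master logic; it shares payments with blue.
    pay = registry.discover("payments")
    thumbnail = registry.discover("thumbnail")
    return pay(5), thumbnail("cat.png")
```

Each application supplies only its own “main” logic; everything else is sourced by name, which is why the quality of the content and the ubiquity of the framework matter so much.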

Until just recently, success with this model has been limited to highly controlled environments such as the large enterprise, which has the resources and fortitude to train developers and build the environment. The frameworks are draconian in their requirements, and the content is limited to what you either build yourself or pay for dearly. It has taken the slow maturation of open source and the Internet to bring the concept to the masses, and it looks quite different from traditional SOA. (I call it “loosey goosey SOA.”)

In the Web 2.0 era, service oriented architecture (note the lack of capitalization) is driven much more by serendipity than a master framework. Basic programming problems (lists, catalogs, file upload, sorts, databases, etc.) have been solved many times over, and high quality code is available for free if you know where to look. Cheap cloud services and extensive online documentation for every technology one could contemplate using allow anyone to tinker and build. There is no “IT department” saying “NO!” – so a younger generation of potentially naïve programmers is building things in ways that evoke great skepticism from the establishment.

But they work. They sell. They’re successful. They’re the future. And that is all that the market really cares about.

So, technical dogmatism bows to pragmatism, and the new model of compositional programming continues to gain momentum. The “citizen programmer,” who is more of a compositional artist than a coder, gains more power while the traditional dependency-solving IT department is diminished, replaced by automation in the cloud. The problems of this model (its brittleness, lack of definition, and so forth) are now themselves the target of new technology development. This is where software companies that want to stay in business will look to find their relevance. Their solutions will have to emphasize choice of services, languages, and data persistence, because developers will not tolerate being dictated to in these matters.

“…like rama lama lama ka dinga da dinga dong…”

The trend toward greater flexibility, especially through the use of compositional techniques, is present in both hardware and software domains. That these trends complement each other should be no surprise. They “go together.” As we build our applications from smaller, shorter-lived components, we’ll need the ability to rapidly (in seconds) source just the right amount of infrastructure to support them. This rapid acquire/release capability is critical to achieving maximum business velocity at the lowest possible cost.
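As a rough illustration of the acquire/release pattern, here is a Python sketch in which a short-lived component holds capacity only for as long as it runs. `Capacity`, `lease`, and the unit counts are hypothetical; a real platform would be calling a provisioning service rather than adjusting a counter.

```python
from contextlib import contextmanager


class Capacity:
    """Hypothetical pool of interchangeable infrastructure units."""

    def __init__(self, units: int) -> None:
        self.free = units


@contextmanager
def lease(capacity: Capacity, units: int):
    # Acquire just the amount needed, and always give it back,
    # even if the component using it fails.
    if units > capacity.free:
        raise RuntimeError("insufficient capacity")
    capacity.free -= units
    try:
        yield units
    finally:
        capacity.free += units


cloud = Capacity(units=10)
observed = []

with lease(cloud, 3):
    observed.append(cloud.free)  # capacity held while the component runs

observed.append(cloud.free)      # capacity returned as soon as it finishes
```

Because the release is tied to the component's lifetime, nothing sits idle once the work is done, which is where the cost side of the argument comes from.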

“That’s the way it should be. Wah-ooh, yeah!”