Part 2 – Distributing the Security Perimeter

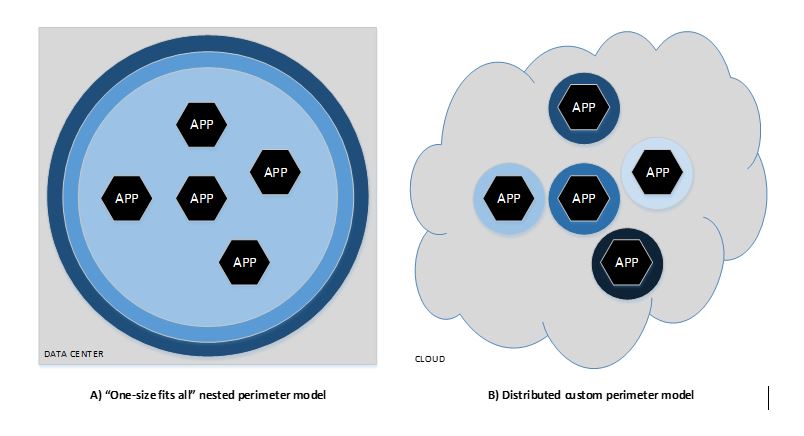

In Part 1 of this series, we examined the fallacy that physical ownership and control of hardware, combined with multi-layered perimeter defenses, produces the most secure IT deployments. In the hyper-connected cloud era, that assumption no longer holds. In private data centers that rely on this model (see figure A below), a breach of one co-resident system typically exposes the others to attack. Indeed, some of the most successful virus and worm attacks follow exactly this pattern. They gain entry first, then use information gleaned from the most vulnerable systems to break into others in the same data center, without having to get past the “strong” outer perimeter again. Analyzing this kind of leap-frog attack shows that the layered-perimeter model is not really a good example of defense in depth. True defense in depth would require that an attacker who has compromised one system find it at least as difficult to use that newfound knowledge to reach the next victim as it was to breach the first.

Applications written to run “in the cloud” make very few assumptions about the security of the cloud service as a whole. Controls such as the firewall move from the data center’s network boundary to the application’s network boundary, where rules can be configured to suit exactly the requirements of that application rather than the aggregate requirements of every application in the data center. This distributed, custom-perimeter model is shown in figure B, below. By forcing applications to take on more responsibility for their own security, we also make them more portable: they can run in a private or public cloud, on or off premises. When an application is designed with this self-enforced security model in mind, the ownership and physical proximity of the service matter much less to its security.
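To make the contrast between the two models concrete, here is a minimal Python sketch. Everything in it is a hypothetical simplification rather than any product's firewall API: the `10.0.0.0/8` internal range, the `(port, source network)` rule format, and the functions `rules_admit`, `perimeter_admits`, and `app_admits` are all assumptions made for illustration. The perimeter model filters only traffic arriving from outside the data center and implicitly trusts co-resident systems; the application-boundary model checks every connection against the application's own rules.

```python
from ipaddress import ip_address, ip_network

# Hypothetical internal address range shared by all co-resident systems.
DATA_CENTER = ip_network("10.0.0.0/8")

# Hypothetical rule format: (allowed TCP port, allowed source network).
# This web application needs only HTTP and HTTPS, from anywhere.
WEB_APP_RULES = [(80, ip_network("0.0.0.0/0")), (443, ip_network("0.0.0.0/0"))]


def rules_admit(rules, src, port):
    """Default deny: admit only if some (port, source network) rule matches."""
    return any(port == p and ip_address(src) in net for p, net in rules)


def perimeter_admits(rules, src, port):
    """Perimeter model: only traffic from outside the data center is filtered."""
    if ip_address(src) in DATA_CENTER:
        return True  # co-resident systems are implicitly trusted
    return rules_admit(rules, src, port)


def app_admits(rules, src, port):
    """Application-boundary model: every connection is filtered, whatever its origin."""
    return rules_admit(rules, src, port)


# A compromised neighbor inside the data center probes SSH on the web app.
print(perimeter_admits(WEB_APP_RULES, "10.0.0.5", 22))  # True
print(app_admits(WEB_APP_RULES, "10.0.0.5", 22))        # False
```

The neighbor's SSH probe sails through the perimeter check because it originates inside the data center, but the application's own rules refuse it. That refusal is the leap-frog path the self-enforced model is meant to close.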

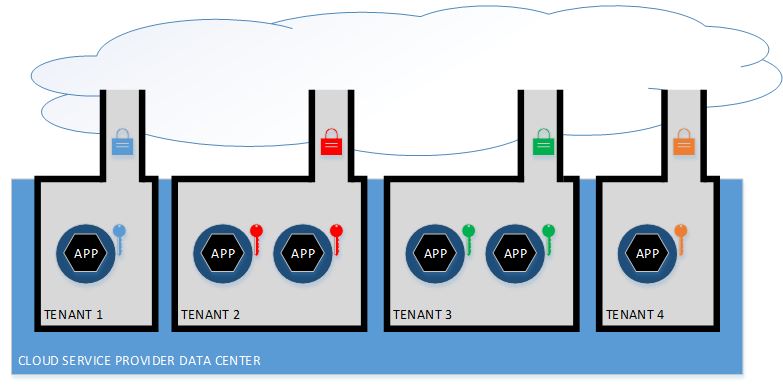

Although applications should not rely on data center security for their own specific needs, security at the data center level is still critical to the overall success of the cloud model. Because the application relies heavily on its network connections for persistence and access, the application developer/operator is responsible for configuring the application’s cloud services to meet those specific needs. The cloud service provider (CSP) is responsible for delivering a reliable, secure infrastructure service that, once configured for the application, maintains that configuration securely and keeps it available, as shown in the figure below. To borrow an apartment-building analogy, the CSP constructs each tenant’s apartment to the tenant’s requirements, such as its size and its ingress/egress protections, and further guarantees the isolation of tenants from one another as part of its security obligations.

As an example, let us return to our firewall scenario in a bit more detail. Suppose an application requires only HTTP and HTTPS connections for all of its communication and persistence operations. Upon deployment, the cloud service is configured to assign the application an Internet Protocol (IP) address and an associated Domain Name System (DNS) name, and to implement rules that admit only HTTP and HTTPS traffic from specific sources to the application’s container. After deployment, the cloud service must ensure that the IP address and DNS name are not changed, that the firewall rules are not altered, that the rules are enforced, and that the application remains strictly isolated from applications in other tenancies. In this way, the cloud service provider’s role is cleanly separated from that of the application developer/operator: the CSP neither knows nor cares what the firewall rules are; it knows only that it must enforce the rules that were configured. This contrasts sharply with traditional data centers, where the firewall rule set is a merge, often a conflicting one, of the rules required by every application and subsystem behind the firewall.
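The division of responsibility in this firewall scenario can be sketched in Python as follows. This is a hypothetical model, not any real provider's API. `IngressRule`, `TenantConfig`, and `Provider` are invented names, and the addresses come from the IPv4 documentation ranges `192.0.2.0/24`, `198.51.100.0/24`, and `203.0.113.0/24`. The tenant supplies its configuration at deployment. The provider then keeps that configuration frozen and refuses to reassign its IP address or DNS name. It evaluates traffic against the rules without interpreting what they are for.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class IngressRule:
    """Hypothetical rule: admit TCP traffic on `port` from the `source` network."""
    port: int
    source: str  # CIDR notation, e.g. "203.0.113.0/24"


@dataclass(frozen=True)
class TenantConfig:
    """Supplied by the developer/operator at deployment; frozen so it cannot be altered."""
    tenant: str
    ip: str
    dns_name: str
    rules: tuple  # tuple of IngressRule, immutable like the rest of the config


class Provider:
    """Hypothetical CSP enforcement point: it stores and applies each tenant's
    configuration without knowing what the rules are meant to achieve."""

    def __init__(self):
        self._by_ip = {}
        self._by_name = {}

    def deploy(self, config):
        # Once assigned, an IP address or DNS name is never handed to another tenant.
        if config.ip in self._by_ip or config.dns_name in self._by_name:
            raise ValueError("IP address or DNS name already assigned")
        self._by_ip[config.ip] = config
        self._by_name[config.dns_name] = config.ip

    def resolve(self, dns_name):
        return self._by_name[dns_name]

    def admit(self, src_ip, dst_ip, port):
        config = self._by_ip.get(dst_ip)
        if config is None:
            return False  # default deny: no tenant owns this address
        # Isolation: a source in another tenancy gets no implicit trust; it must
        # match the destination tenant's own rules like any other source.
        return any(
            port == rule.port and ip_address(src_ip) in ip_network(rule.source)
            for rule in config.rules
        )


shop = TenantConfig(
    tenant="shop",
    ip="192.0.2.10",
    dns_name="shop.example.com",
    rules=(IngressRule(80, "203.0.113.0/24"), IngressRule(443, "203.0.113.0/24")),
)
csp = Provider()
csp.deploy(shop)
print(csp.admit("203.0.113.7", csp.resolve("shop.example.com"), 443))  # True
print(csp.admit("198.51.100.7", "192.0.2.10", 443))  # False: source not allowed
print(csp.admit("203.0.113.7", "192.0.2.10", 22))    # False: port not allowed
```

In this sketch the provider deliberately offers no way to update a deployed configuration. Assigning to `shop.rules` raises `dataclasses.FrozenInstanceError`, which mirrors the requirement that the address, name, and rules stay fixed after deployment.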

In my third and final post in this series, I’ll explore the virtues and caveats of this model further and explain how, when properly implemented, it solves the security problem for applications in the most general case: the public cloud.